AI-powered phishing isn’t just a new buzzword; it’s a game-changer in the world of cybercrime. These advanced scams are designed to be so convincing, so personal, that they bypass our natural skepticism and even some of our digital defenses. It’s not just about catching a bad email anymore; it’s about navigating a landscape where the lines between genuine and malicious are blurring faster than ever before. For everyday internet users and small businesses alike, understanding this evolving threat isn’t just recommended—it’s essential for protecting your digital life.

As a security professional, I’ve seen firsthand how quickly these tactics evolve. My goal here isn’t to alarm you, but to empower you with the knowledge and practical solutions you need to stay safe. Let’s unmask these advanced scams and build a stronger defense for you and your business.

AI-Powered Phishing: Unmasking Advanced Scams and Building Your Defense

The New Reality of Digital Threats: AI’s Impact

We’re living in a world where digital threats are constantly evolving, and AI has undeniably pushed the boundaries of what cybercriminals can achieve. Gone are the days when most phishing attempts were easy to spot due to glaring typos or generic greetings. Today, generative AI and large language models (LLMs) are arming attackers with unprecedented capabilities, making scams incredibly sophisticated and alarmingly effective.

What is Phishing (and How AI Changed the Game)?

At its core, phishing is a type of social engineering attack where criminals trick you into giving up sensitive information, like passwords, bank details, or even money. Traditionally, this involved mass emails with obvious red flags. Think of the classic “Nigerian prince” scam, vague “verify your account” messages from an unknown sender, or emails riddled with grammatical errors and strange formatting. These traditional phishing attempts were often a numbers game for attackers, hoping a small percentage of recipients would fall for their clumsy ploys. Their lack of sophistication made them relatively easy to identify for anyone with a modicum of cyber awareness.

But AI changed everything. With AI and LLMs, attackers can now generate highly convincing, personalized messages at scale. Imagine an algorithm that learns your communication style from your public posts, researches your professional contacts, and then crafts an email from your “boss” that asks for an urgent wire transfer in perfect grammar, matches your boss’s usual tone, and references a legitimate ongoing project. That’s the power AI brings to phishing: automation, scale, and a level of sophistication that was previously impossible, blurring the lines between what’s real and what’s malicious.

Why AI Phishing is So Hard to Spot (Even for Savvy Users)

It’s not just about clever tech; it’s about how AI exploits our human psychology. Here’s why these smart scams are so difficult to detect:

- Flawless Language: AI virtually eliminates the common tell-tale signs of traditional phishing, like poor grammar or spelling. Messages are impeccably written, often mimicking native speakers perfectly, regardless of the attacker’s origin.

- Hyper-Personalization: AI can scour vast amounts of public data—your social media, LinkedIn, company website, news articles—to craft messages that are specifically relevant to you. It might mention a recent project you posted about, a shared connection, or an interest you’ve discussed online, making the sender seem incredibly legitimate. This taps into our natural trust and lowers our guard.

- Mimicking Trust: Not only can AI generate perfect language, but it can also analyze and replicate the writing style and tone of people you know—your colleague, your bank, even your CEO. This makes “sender impersonation” chillingly effective. For instance, AI could generate an email that perfectly matches your manager’s usual phrasing, making an urgent request for project data seem completely legitimate.

- Urgency & Emotion: AI is adept at crafting narratives that create a powerful sense of urgency, fear, or even flattery, pressuring you to act quickly without critical thinking. It leverages cognitive biases to bypass rational thought, making it incredibly persuasive and hard to resist.

Beyond Email: The Many Faces of AI-Powered Attacks

AI-powered attacks aren’t confined to your inbox. They’re branching out, adopting new forms to catch you off guard.

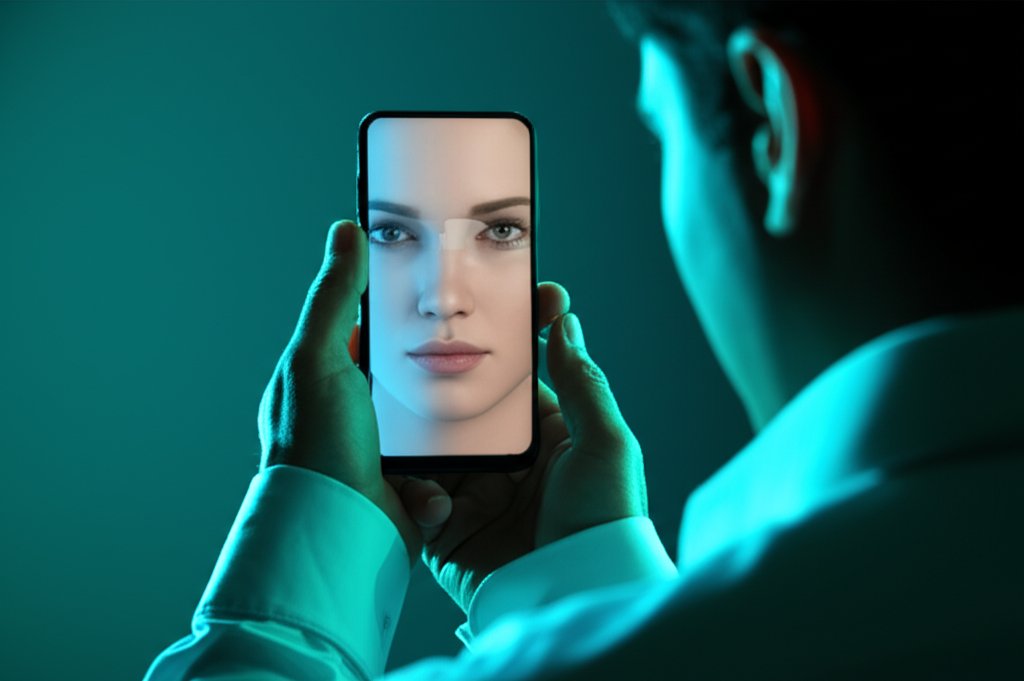

- Deepfake Voice & Video Scams (Vishing & Deepfakes): We’re seeing a rise in AI-powered voice cloning and deepfake videos. Attackers can now synthesize the voice of a CEO, a family member, or even a customer, asking for urgent financial transactions or sensitive information over the phone (vishing). Imagine receiving a video call from your “boss” requesting an immediate wire transfer—that’s the terrifying potential of deepfake technology being used for fraud. There are real-world examples of finance employees being duped by deepfake voices of their executives, losing millions.

- AI-Generated Fake Websites & Chatbots: AI can create incredibly realistic replicas of legitimate websites, complete with convincing branding, designed solely to harvest your login credentials. These sites often carry valid TLS/SSL certificates as well, since attackers can easily obtain one for any domain they control, so the padlock icon alone doesn’t prove a site is legitimate. Furthermore, we’re starting to see AI chatbots deployed for real-time social engineering, engaging victims in conversations to extract information or guide them to malicious sites. Even “AI SEO” is becoming a threat, where LLMs or search engines might inadvertently recommend phishing sites if they’re well-optimized by attackers.

- Polymorphic Phishing: This is a sophisticated technique where AI can dynamically alter various components of a phishing attempt—wording, links, attachments—on the fly. This makes it much harder for traditional email filters and security tools to detect and block these attacks, as no two phishing attempts might look exactly alike.
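To make the polymorphic point concrete, here is a minimal Python sketch of why exact-match signatures struggle. The two messages, the `pay.example` links, and the `fingerprint` helper are all made up for illustration; real filters are far more sophisticated than a hash blocklist.

```python
import hashlib
from difflib import SequenceMatcher


def fingerprint(message: str) -> str:
    """Exact-match signature: any change to the text changes the hash."""
    return hashlib.sha256(message.encode("utf-8")).hexdigest()


# Two hypothetical variants of the same lure, as a polymorphic campaign might send.
known_bad = "Your invoice is overdue. Pay now at http://pay.example/a1"
variant = "Your invoice is past due. Please pay today at http://pay.example/z9"

# A blocklist built from the first message does not recognize the second one.
blocklist = {fingerprint(known_bad)}
exact_match = fingerprint(variant) in blocklist

# A fuzzy comparison still shows that the two messages are closely related.
similarity = SequenceMatcher(None, known_bad, variant).ratio()

if __name__ == "__main__":
    print("exact signature match:", exact_match)
    print("text similarity:", round(similarity, 2))
```

The design point is simply that detection has to look at similarity and behavior rather than identical content, which is where the AI-based defenses discussed later come in.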

Your First Line of Defense: Smart Password Management

Given that a primary goal of AI-powered phishing is credential harvesting, robust password management is more critical than ever. Attackers are looking for easy access, and a strong, unique password for every account is your first, best barrier. If you’re reusing passwords, or using simple ones, you’re essentially leaving the door open for AI-driven bots to walk right in.

That’s why I can’t stress enough the importance of using a reliable password manager. Tools like LastPass, 1Password, or Bitwarden generate complex, unique passwords for all your accounts, store them securely, and even autofill them for you. You only need to remember one master password. This single step dramatically reduces your risk from brute-force attacks and credential stuffing, which can exploit passwords stolen in other breaches. Autofill also helps against phishing directly: a password manager matches saved logins to the site’s real domain, so it won’t offer your credentials on a lookalike page. Implementing this isn’t just smart; it’s non-negotiable in today’s threat landscape.
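If you’re curious what “complex, unique passwords” means in practice, here is a minimal Python sketch of the idea using the standard library’s `secrets` module. The `generate_password` function, the default length, and the symbol set are my own assumptions for illustration, not how any particular password manager works.

```python
import secrets
import string

# Character classes the password must draw from (the symbol set is an arbitrary choice).
CHARACTER_CLASSES = [
    string.ascii_lowercase,
    string.ascii_uppercase,
    string.digits,
    "!@#$%^&*()-_=+",
]


def generate_password(length: int = 20) -> str:
    """Return a random password containing at least one character of each class."""
    if length < len(CHARACTER_CLASSES):
        raise ValueError("length is too short to include every character class")
    alphabet = "".join(CHARACTER_CLASSES)
    while True:
        # secrets.choice draws from a cryptographically secure random source.
        candidate = "".join(secrets.choice(alphabet) for _ in range(length))
        if all(any(ch in cls for ch in candidate) for cls in CHARACTER_CLASSES):
            return candidate


if __name__ == "__main__":
    print(generate_password())
```

In everyday use you would let the password manager do this for you; the sketch only shows that the passwords are random rather than memorable.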

Remember, even the most sophisticated phishing tactics often lead back to trying to steal your login credentials. Make them as hard to get as possible.

Adding a Critical Second Layer: Two-Factor Authentication (2FA)

Even if an AI-powered phishing attack manages to trick you into revealing your password, Multi-Factor Authentication (MFA), of which Two-Factor Authentication (2FA) is the most common form, acts as a critical second line of defense. It means that simply having your password isn’t enough; an attacker would also need something else, like a code from your phone or a biometric scan, to access your account.

Setting up 2FA is usually straightforward. Most online services offer it under their security settings. You’ll often be given options like using an authenticator app (like Google Authenticator or Authy), receiving a code via text message, or using a hardware key. I always recommend authenticator apps or hardware keys over SMS, as SMS codes can be intercepted, for example through SIM swapping. Hardware keys go a step further: they are bound to the real site’s domain, so a lookalike phishing page can’t use them. Make it a priority to enable 2FA on every account that offers it, especially for email, banking, social media, and any service that holds sensitive data. It’s an easy step that adds a massive layer of security, protecting you even when your password might be compromised.
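For readers who want to see what an authenticator app is doing under the hood, here is a minimal Python sketch of time-based one-time passwords as specified in RFC 6238. The `totp` function name and the demo secret are illustrative assumptions; real apps receive a base32-encoded secret from the service (usually via QR code) rather than raw bytes.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, at_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Compute a time-based one-time password (RFC 6238, HMAC-SHA-1)."""
    if at_time is None:
        at_time = time.time()
    # Both sides count how many 30-second steps have passed since the Unix epoch.
    counter = int(at_time) // step
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation (RFC 4226): the last nibble picks a 4-byte window.
    offset = digest[-1] & 0x0F
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


if __name__ == "__main__":
    demo_secret = b"12345678901234567890"  # test secret from RFC 6238, not a real key
    # RFC 6238 test vector: at Unix time 59 the 8-digit SHA-1 code is 94287082.
    print(totp(demo_secret, at_time=59, digits=8))
    print(totp(demo_secret))  # current 6-digit code for the demo secret
```

Because the code depends on a shared secret and the current time, a stolen password alone is not enough. Note, though, that a code typed into a fake page can still be relayed by an attacker within its short validity window.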

Securing Your Digital Footprint: VPN Selection and Browser Privacy

While phishing attacks primarily target your trust, a robust approach to your overall online privacy can still indirectly fortify your defenses. Protecting your digital footprint means making it harder for attackers to gather information about you, which they could then use to craft highly personalized AI phishing attempts.

When it comes to your connection, a Virtual Private Network (VPN) encrypts your internet traffic, providing an additional layer of privacy, especially when you’re using public Wi-Fi. While a VPN won’t stop a phishing email from landing in your inbox, it makes your online activities less traceable, reducing the amount of data accessible to those looking to profile you. When choosing a VPN, consider its no-logs policy, server locations, and independent audits for transparency.

Your web browser is another critical defense point. Browser hardening involves adjusting your settings to enhance privacy and security. This includes:

- Using privacy-focused browsers or extensions (like uBlock Origin or Privacy Badger) to block trackers and malicious ads.

- Disabling third-party cookies by default.

- Being cautious about the permissions you grant to websites.

- Keeping your browser and all its extensions updated to patch vulnerabilities.

- Scrutinizing website URLs before clicking or entering data. A legitimate-looking site might have a subtle typo in its domain (e.g., “bankk.com” instead of “bank.com”), a classic phishing tactic.
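The “bankk.com” trick from the list above can be caught mechanically. Here is a minimal Python sketch that compares a domain against a short allowlist using edit distance plus a few digit-for-letter swaps. The `TRUSTED_DOMAINS` set, the `HOMOGLYPHS` table, and the function names are hypothetical; treat this as an illustration of the idea, not a complete detector.

```python
from typing import Optional

# Hypothetical allowlist of domains you actually do business with.
TRUSTED_DOMAINS = {"bank.com", "microsoft.com"}

# A few common digit-for-letter substitutions (far from exhaustive).
HOMOGLYPHS = str.maketrans({"0": "o", "1": "l", "3": "e", "5": "s"})


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: insertions, deletions, and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost))
        prev = curr
    return prev[-1]


def lookalike_of(domain: str, max_distance: int = 1) -> Optional[str]:
    """Return the trusted domain this one imitates, or None if nothing matches."""
    domain = domain.lower().strip(".")
    if domain in TRUSTED_DOMAINS:
        return None  # exact match: this is the real thing
    normalized = domain.translate(HOMOGLYPHS)
    for trusted in TRUSTED_DOMAINS:
        if normalized == trusted or edit_distance(domain, trusted) <= max_distance:
            return trusted
    return None


if __name__ == "__main__":
    for candidate in ("bankk.com", "bank.com", "example.org"):
        print(candidate, "->", lookalike_of(candidate))
```

A threshold of one edit is deliberately strict; a looser threshold catches more tricks but also flags more innocent domains.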

Safe Communications: Encrypted Apps and Social Media Awareness

The way we communicate and share online offers valuable data points for AI-powered attackers. By being mindful of our digital interactions, we can significantly reduce their ability to profile and deceive us.

For sensitive conversations, consider using end-to-end encrypted messaging apps like Signal or WhatsApp (though Signal is generally preferred for its strong privacy stance). These apps ensure that only the sender and recipient can read the messages, protecting your communications from eavesdropping, which can sometimes be a prelude to a targeted phishing attempt.

Perhaps even more critical in the age of AI phishing is your social media presence. Every piece of information you share online—your job, your interests, your friends, your location, your vacation plans—is potential fodder for AI to create a hyper-personalized phishing attack. Attackers use this data to make their scams incredibly convincing and tailored to your life. To counter this:

- Review your privacy settings: Limit who can see your posts and personal information.

- Be selective about what you share: Think twice before posting details that could be used against you.

- Audit your connections: Regularly check your friend lists and followers for suspicious accounts.

- Be wary of quizzes and surveys: Many seemingly innocuous online quizzes are designed solely to collect personal data for profiling.

By minimizing your digital footprint and being more deliberate about what you share, you starve the AI of the data it needs to craft those perfectly personalized deceptions.

Minimize Risk: Data Minimization and Secure Backups

In the cybersecurity world, we often say “less is more” when it comes to data. Data minimization is the practice of collecting, storing, and processing only the data that is absolutely necessary. For individuals and especially small businesses, this significantly reduces the “attack surface” available to AI-powered phishing campaigns.

Think about it: if a phisher can’t find extensive details about your business operations, employee roles, or personal habits, their AI-generated attacks become far less effective and less personalized. Review the information you make publicly available online, and implement clear data retention policies for your business. Don’t keep data longer than you need to, and ensure access to sensitive information is strictly controlled.

No matter how many defenses you put in place, the reality is that sophisticated attacks can sometimes succeed. That’s why having secure, regular data backups is non-negotiable. If you fall victim to a ransomware attack (often initiated by a phishing email) or a data breach, having an uninfected, off-site backup can be your salvation. For small businesses, this is part of your crucial incident response plan—it ensures continuity and minimizes the damage if the worst happens. Test your backups regularly to ensure they work when you need them most.
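As one way to “test your backups,” here is a minimal Python sketch that compares checksums between a source folder and its backup copy. The function names and folder layout are assumptions for illustration. It only confirms that the copy matches the current source, so files changed since the last backup will also show up as mismatches, and it is no substitute for a full restore test.

```python
import hashlib
from pathlib import Path
from typing import List


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_backup(source_dir: Path, backup_dir: Path) -> List[str]:
    """List files that are missing from the backup or differ from the source."""
    problems = []
    for src in sorted(source_dir.rglob("*")):
        if not src.is_file():
            continue
        rel = src.relative_to(source_dir)
        dst = backup_dir / rel
        if not dst.is_file():
            problems.append(f"missing: {rel.as_posix()}")
        elif sha256_of(src) != sha256_of(dst):
            problems.append(f"mismatch: {rel.as_posix()}")
    return problems
```

Calling `verify_backup(Path("documents"), Path("/mnt/backup/documents"))` (both paths hypothetical) returns an empty list when every file is present and identical.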

Building Your “Human Firewall”: Threat Modeling and Vigilance

Even with the best technology, people remain the strongest—and weakest—link in security. Against the cunning of AI-powered phishing, cultivating a “human firewall” and a “trust but verify” culture is paramount. This involves not just knowing the threats but actively thinking like an attacker to anticipate and defend.

Red Flags: How to Develop Your “AI Phishing Radar”

AI makes phishing subtle, but there are still red flags. You need to develop your “AI Phishing Radar”:

- Unusual Requests: Be highly suspicious of any unexpected requests for sensitive information, urgent financial transfers, or changes to payment details, especially if they come with a sense of manufactured urgency.

- Inconsistencies (Even Subtle Ones): Always check the sender’s full email address (not just the display name). Look for slight deviations in tone or common phrases from a known contact. AI is good, but sometimes it misses subtle nuances.

- Too Good to Be True/Threatening Language: While AI can be subtle, some attacks still rely on unrealistic offers or overly aggressive threats to pressure you.

- Generic Salutations with Personalized Details: A generic “Dear Customer” greeting combined with highly specific details about your recent order can be a sign that a template was filled in automatically with scraped data.

- Deepfake Indicators (Audio/Video): In deepfake voice or video calls, watch for unusual pacing, a lack of natural emotion, inconsistent voice characteristics, or any visual artifacts, blurring, or unnatural movements in video. If something feels “off,” it probably is.

- Website URL Scrutiny: Always hover over links (without clicking!) to see the true destination. Look for lookalike domains (e.g., “micros0ft.com” instead of “microsoft.com”).
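The “hover before you click” check from the list above can also be automated. Here is a minimal Python sketch that scans an HTML email body and flags links whose visible text shows one domain while the underlying `href` points to another. The class and function names are my own, and the rule for deciding that link text “looks like a domain” is a simplifying assumption.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class LinkCollector(HTMLParser):
    """Collect (href, visible_text) pairs for every <a href=...> element."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def _strip_www(host: str) -> str:
    return host[4:] if host.startswith("www.") else host


def mismatched_links(html: str):
    """Return (shown_host, real_host) pairs where the link text hides the target."""
    parser = LinkCollector()
    parser.feed(html)
    flagged = []
    for href, text in parser.links:
        # Only compare when the visible text itself looks like a domain or URL.
        if " " in text or "." not in text:
            continue
        shown = text if "://" in text else "//" + text
        shown_host = _strip_www(urlparse(shown).hostname or "")
        real_host = _strip_www(urlparse(href).hostname or "")
        if shown_host and real_host and shown_host != real_host:
            flagged.append((shown_host, real_host))
    return flagged


if __name__ == "__main__":
    sample = '<a href="https://micros0ft.com/login">www.microsoft.com</a>'
    print(mismatched_links(sample))
```

Links with ordinary text such as “Click here” are skipped on purpose, since there is no displayed domain to compare; those still need the manual hover check.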

Your Shield Against AI Scams: Practical Countermeasures

For individuals and especially small businesses, proactive and reactive measures are key:

- Be a Skeptic: Don’t trust anything at first glance. Always verify requests, especially sensitive ones, via a separate, known communication channel. Call the person back on a known number; do not reply directly to a suspicious email.

- Regular Security Awareness Training: Crucial for employees to recognize evolving AI threats. Conduct regular phishing simulations to test their vigilance and reinforce best practices. Foster a culture where employees feel empowered to question suspicious communications without fear of repercussions.

- Implement Advanced Email Filtering & Authentication: Solutions that use AI to detect behavioral anomalies, enforce email authentication standards (SPF, DKIM, DMARC) to catch domain spoofing, and block sophisticated phishing attempts are vital.

- Clear Verification Protocols: Establish mandatory procedures for sensitive transactions (e.g., a “call-back” policy for wire transfers, two-person approval for financial changes).

- Endpoint Protection & Behavior Monitoring: Advanced security tools that detect unusual activity on devices can catch threats that bypass initial email filters.

- Consider AI-Powered Defensive Tools: We’re not just using AI for attacks; AI is also a powerful tool for defense. Look into security solutions that leverage AI to detect patterns, anomalies, and evolving threats in incoming communications and network traffic. It’s about fighting fire with fire.
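To show what the SPF, DKIM, and DMARC checks from the list above look like in a real message, here is a minimal Python sketch that reads the `Authentication-Results` header a receiving mail server adds. The sample email, the `mx.example.net` server name, and the “anything but a DMARC pass needs a second look” rule are assumptions for illustration. Only trust this header when it was added by your own mail provider, since a sender can forge headers of their own.

```python
import re
from email import message_from_string

# Hypothetical message as it might arrive after the receiving server's checks.
RAW_EMAIL = """\
Authentication-Results: mx.example.net; spf=pass smtp.mailfrom=example.com; dkim=fail header.d=example.com; dmarc=fail header.from=example.com
From: "Accounts Payable" <billing@example.com>
Subject: Updated payment details

Please use the new account number below.
"""


def auth_results(raw: str) -> dict:
    """Extract the spf/dkim/dmarc verdicts from the Authentication-Results header."""
    header = message_from_string(raw).get("Authentication-Results", "")
    results = {}
    for method in ("spf", "dkim", "dmarc"):
        match = re.search(rf"\b{method}=(\w+)", header, flags=re.IGNORECASE)
        results[method] = match.group(1).lower() if match else "missing"
    return results


def needs_review(results: dict) -> bool:
    """Illustrative policy: treat anything other than a DMARC pass as suspect."""
    return results["dmarc"] != "pass"


if __name__ == "__main__":
    verdicts = auth_results(RAW_EMAIL)
    print(verdicts, "needs review:", needs_review(verdicts))
```

Keep in mind that a pass only proves the message really came from the domain it claims. A lookalike domain can pass all three checks, which is why the human verification steps above still matter.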

The Future is Now: Staying Ahead in the AI Cybersecurity Race

The arms race between AI for attacks and AI for defense is ongoing. Staying ahead means continuous learning and adapting to new threats. It requires understanding that technology alone isn’t enough; our vigilance, our skepticism, and our commitment to ongoing education are our most powerful tools.

The rise of AI-powered phishing has brought unprecedented sophistication to cybercrime, making scams more personalized, convincing, and harder to detect than ever before. But by understanding the mechanics of these advanced attacks and implementing multi-layered defenses, from strong password management and multi-factor authentication to building a vigilant “human firewall” and leveraging smart security tools, we can significantly reduce our risk. Protecting your digital life isn’t a one-time task; it’s an ongoing commitment to awareness and action. Start with a password manager and 2FA today.

FAQ: Why Do AI-Powered Phishing Attacks Keep Fooling Us? Understanding and Countermeasures

AI-powered phishing attacks represent a new frontier in cybercrime, leveraging sophisticated technology to bypass traditional defenses and human intuition. This FAQ aims to demystify these advanced threats and equip you with practical knowledge to protect yourself and your business.

Table of Contents

- What is AI-powered phishing, and how does it differ from traditional phishing?

- Why are AI phishing attacks so much more effective than older scams?

- Can AI-powered phishing attacks impersonate people I know?

- What are deepfake scams, and how do they relate to AI phishing?

- How can I recognize the red flags of an AI-powered phishing attempt?

- What are the most important steps individuals can take to protect themselves?

- How can small businesses defend against these advanced AI threats?

- Can my current email filters and antivirus software detect AI phishing?

- What is a “human firewall,” and how does it help against AI phishing?

- What are the potential consequences of falling victim to an AI phishing attack?

- How can I report an AI-powered phishing attack?

Basics (Beginner Questions)

What is AI-powered phishing, and how does it differ from traditional phishing?

AI-powered phishing utilizes artificial intelligence, particularly large language models (LLMs), to create highly sophisticated and personalized scam attempts. Unlike traditional phishing, which often relies on generic messages with obvious errors like poor grammar, misspellings, or generic salutations, AI phishing produces flawless language, mimics trusted senders’ tones, and crafts messages tailored to your specific interests or professional context, making it far more convincing.

AI-driven attacks can analyze vast amounts of data to generate content that appears perfectly legitimate, reflecting specific company terminology, personal details, or conversational styles. This significantly increases their success rate by lowering our natural defenses.

Why are AI phishing attacks so much more effective than older scams?

AI phishing attacks are more effective because they eliminate common red flags and leverage deep personalization and emotional manipulation at scale. By generating perfect grammar, hyper-relevant content, and mimicked communication styles, AI bypasses our usual detection mechanisms, making it incredibly difficult to distinguish fake messages from genuine ones.

AI tools can sift through public data (social media, corporate websites, news articles) to build a detailed profile of a target. This allows attackers to craft messages that resonate deeply with the recipient’s personal or professional life, exploiting psychological triggers like urgency, authority, or flattery. The sheer volume and speed with which these personalized attacks can be launched also contribute to their increased effectiveness, making them a numbers game with a much higher conversion rate.

Can AI-powered phishing attacks impersonate people I know?

Yes, AI-powered phishing attacks are highly capable of impersonating people you know, including colleagues, superiors, friends, or family members. Using large language models, AI can analyze existing communications to replicate a specific person’s writing style, tone, and common phrases, making the impersonation incredibly convincing.

This capability is often used in Business Email Compromise (BEC) scams, where an attacker impersonates a CEO or CFO to trick an employee into making a fraudulent wire transfer. For individuals, it could involve a message from a “friend” asking for an urgent money transfer after claiming to be in distress. Always verify unusual requests via a separate communication channel, such as a known phone number, especially if they involve money or sensitive information.

Intermediate (Detailed Questions)

What are deepfake scams, and how do they relate to AI phishing?

Deepfake scams involve the use of AI to create realistic but fabricated audio or video content, impersonating real individuals. In the context of AI phishing, deepfakes elevate social engineering to a new level by allowing attackers to mimic someone’s voice during a phone call (vishing) or even create a video of them, making requests appear incredibly authentic and urgent.

For example, a deepfake voice call could simulate your CEO requesting an immediate wire transfer, or a deepfake video might appear to be a family member in distress needing money. These scams exploit our natural trust in visual and auditory cues, pressuring victims into making decisions without proper verification. Vigilance regarding unexpected calls or video messages, especially when money or sensitive data is involved, is crucial.

How can I recognize the red flags of an AI-powered phishing attempt?

Recognizing AI-powered phishing requires a sharpened “phishing radar” because traditional red flags like bad grammar are gone. Key indicators include unusual or unexpected requests for sensitive actions (especially financial), subtle inconsistencies in a sender’s email address or communication style, and messages that exert intense emotional pressure.

Beyond the obvious, look for a mix of generic greetings with highly specific personal details, which AI often generates by combining publicly available information with a general template. In deepfake scenarios, be alert for unusual vocal patterns, lack of natural emotion, or visual glitches. Always hover over links before clicking to reveal the true URL, and verify any suspicious requests through a completely separate and trusted communication channel, never by replying directly to the suspicious message.
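The sender-address inconsistency mentioned above is one of the few red flags that can be checked mechanically. Here is a minimal Python sketch that compares the display name in a `From` header with the domain of the actual address. The `EXPECTED_DOMAINS` mapping and the function name are hypothetical; you would fill the mapping with the organizations you really deal with.

```python
from email.utils import parseaddr

# Hypothetical mapping from a brand name to the domains it legitimately sends from.
EXPECTED_DOMAINS = {
    "microsoft": {"microsoft.com"},
}


def sender_mismatch(from_header: str) -> bool:
    """True if the display name claims a brand but the address domain disagrees."""
    display_name, address = parseaddr(from_header)
    domain = address.rpartition("@")[2].lower()
    for brand, domains in EXPECTED_DOMAINS.items():
        if brand in display_name.lower():
            # Accept the brand's own domain and any of its subdomains.
            if not any(domain == d or domain.endswith("." + d) for d in domains):
                return True
    return False


if __name__ == "__main__":
    print(sender_mismatch('"Microsoft Support" <help@micros0ft.com>'))
```

This only covers impersonation of names you have listed in advance; an unfamiliar sender still needs the out-of-band verification described above.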

What are the most important steps individuals can take to protect themselves?

For individuals, the most important steps involve being a skeptic, using strong foundational security tools, and maintaining up-to-date software. Always question unexpected requests, especially those asking for personal data or urgent actions, and verify them independently. Implementing strong, unique passwords for every account, ideally using a password manager, is essential.

Furthermore, enable Multi-Factor Authentication (MFA) on all your online accounts to add a critical layer of security, making it harder for attackers even if they obtain your password. Keep your operating system, web browsers, and all software updated to patch vulnerabilities that attackers might exploit. Finally, report suspicious emails or messages to your email provider or relevant authorities to help combat these evolving threats collectively.

Advanced (Expert-Level Questions)

How can small businesses defend against these advanced AI threats?

Small businesses must adopt a multi-layered defense against advanced AI threats, combining technology with robust employee training and clear protocols. Implementing advanced email filtering solutions that leverage AI to detect sophisticated phishing attempts, together with email authentication standards (SPF, DKIM, DMARC) that expose domain spoofing, is crucial. Establish clear verification protocols for sensitive transactions, such as a mandatory call-back policy for wire transfers and two-person approval for financial changes.

Regular security awareness training for all employees, including phishing simulations, is vital to build a “human firewall” and foster a culture where questioning suspicious communications is encouraged. Also, ensure you have strong endpoint protection on all devices and a comprehensive data backup and incident response plan in place to minimize damage if an attack succeeds. Consider AI-powered defensive tools that can detect subtle anomalies in network traffic and communications.
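The verification protocols described in this answer (a call-back policy plus two-person approval) are easy to encode so that no single convincing email can release a payment. Here is a minimal Python sketch; the `WireRequest` class, its fields, and the threshold of two approvers are assumptions for illustration, not a reference to any specific payment system.

```python
from dataclasses import dataclass, field


@dataclass
class WireRequest:
    """A payment request that stays blocked until every check has been done."""
    requester: str
    amount: float
    callback_verified: bool = False
    approvers: set = field(default_factory=set)

    def confirm_callback(self) -> None:
        # Record that someone phoned the requester on a known, trusted number.
        self.callback_verified = True

    def approve(self, approver: str) -> None:
        if approver == self.requester:
            raise ValueError("requester cannot approve their own request")
        self.approvers.add(approver)

    def can_release(self) -> bool:
        # Both conditions are required: the call-back AND two distinct approvers.
        return self.callback_verified and len(self.approvers) >= 2


if __name__ == "__main__":
    request = WireRequest(requester="alice", amount=25000.0)
    request.approve("bob")
    request.approve("carol")
    print(request.can_release())  # still blocked until the call-back is confirmed
    request.confirm_callback()
    print(request.can_release())
```

The value of writing the rule down, whether in software or on paper, is that urgency and authority in a message cannot talk anyone past it.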

Can my current email filters and antivirus software detect AI phishing?

Traditional email filters and antivirus software are becoming less effective against AI phishing, though they still provide a baseline defense. Older systems primarily rely on detecting known malicious signatures, blacklisted sender addresses, or common grammatical errors—all of which AI-powered attacks often bypass. AI-generated content can evade these filters because it appears legitimate and unique.

However, newer, more advanced security solutions are emerging that leverage AI and machine learning themselves. These tools can analyze behavioral patterns, contextual cues, and anomalies in communication to identify sophisticated threats that mimic human behavior or evade traditional signature-based detection. Therefore, it’s crucial to ensure your security software is modern and specifically designed to combat advanced, AI-driven social engineering tactics.

What is a “human firewall,” and how does it help against AI phishing?

A “human firewall” refers to a well-trained and vigilant workforce that acts as the ultimate line of defense against cyberattacks, especially social engineering threats like AI phishing. It acknowledges that technology alone isn’t enough; employees’ awareness, critical thinking, and adherence to security protocols are paramount.

Against AI phishing, a strong human firewall is invaluable because AI targets human psychology. Through regular security awareness training, phishing simulations, and fostering a culture of “trust but verify,” employees learn to recognize subtle red flags, question unusual requests, and report suspicious activities without fear. This collective vigilance can effectively neutralize even the most sophisticated AI-generated deceptions before they compromise systems or data, turning every employee into an active defender.

What are the potential consequences of falling victim to an AI phishing attack?

The consequences of falling victim to an AI phishing attack can be severe and far-reaching, impacting both individuals and businesses. For individuals, this can include financial losses from fraudulent transactions, identity theft through compromised personal data, and loss of access to online accounts. Emotional distress and reputational damage are also common.

For small businesses, the stakes are even higher. Consequences can range from significant financial losses due to fraudulent wire transfers (e.g., Business Email Compromise), data breaches leading to customer data exposure and regulatory fines, operational disruptions from ransomware or system compromise, and severe reputational damage. Recovering from such an attack can be costly and time-consuming, sometimes even leading to business closure, underscoring the critical need for robust preventive measures.

Related Questions

How can I report an AI-powered phishing attack?

You can report AI-powered phishing attacks to several entities. Forward suspicious emails to the Anti-Phishing Working Group (APWG) at reportphishing@apwg.org. In the U.S., you can also report to the FBI’s Internet Crime Complaint Center (IC3) at ic3.gov, and for general spam, mark it as phishing/spam in your email client. If you’ve suffered financial loss, contact your bank and local law enforcement immediately.

Conclusion

AI-powered phishing presents an unprecedented challenge, demanding greater vigilance and more robust defenses than ever before. By understanding how these sophisticated attacks operate, recognizing their subtle red flags, and implementing practical countermeasures—both technological and behavioral—you can significantly strengthen your digital security. Staying informed and proactive is your best strategy in this evolving landscape.